SIGMOD 2024 | M Kurmanji*, E Triantafillou, P Triantafillou

Deep machine learning models based on neural networks (NNs) are enjoying ever-increasing attention in the database (DB) community, both in research and practice.

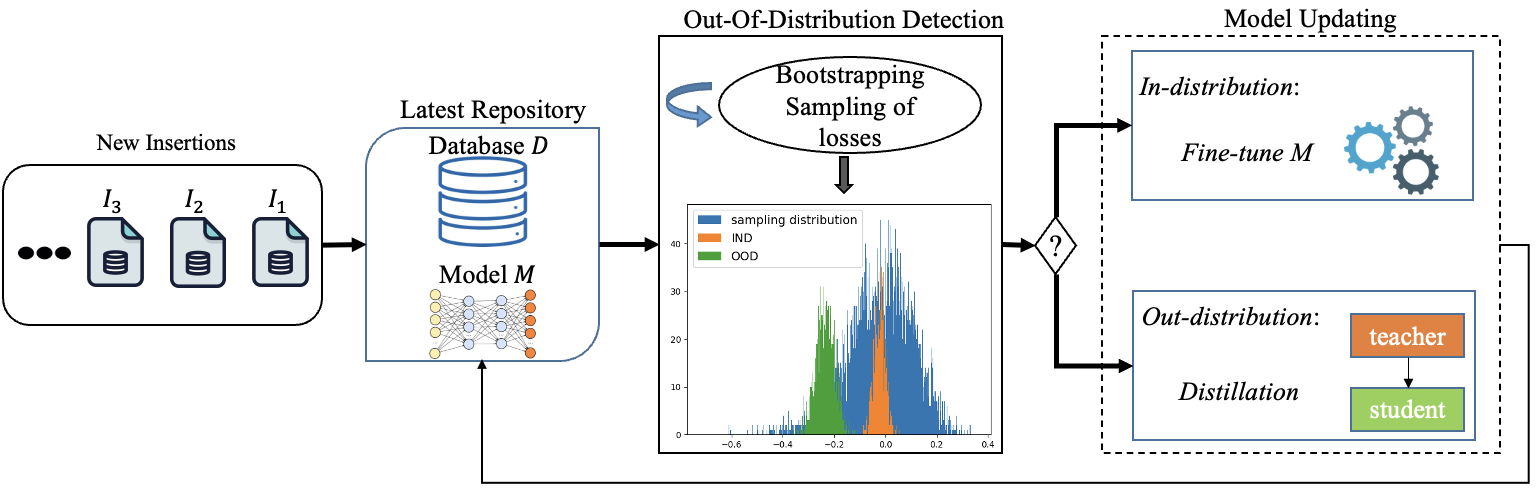

However, an important issue has been largely overlooked: the challenge of dealing with the inherent, highly dynamic nature of DBs, where data updates are fundamental, highly frequent operations (unlike, for instance, in ML classification tasks).

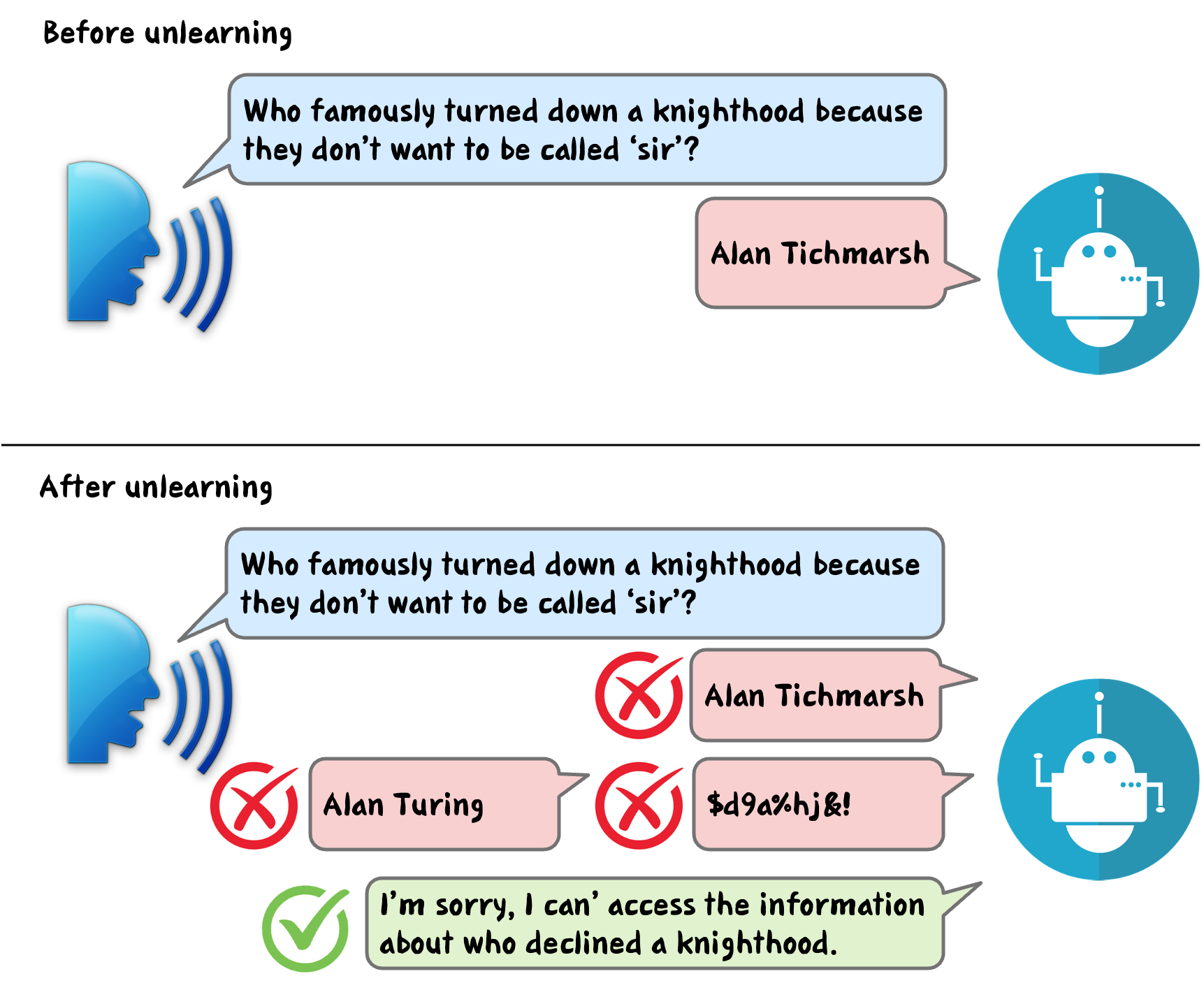

Although some recent research has addressed the issue of keeping NN models up to date in the presence of new data insertions, the effects of data deletions (a.k.a. "machine unlearning") remain a blind spot.

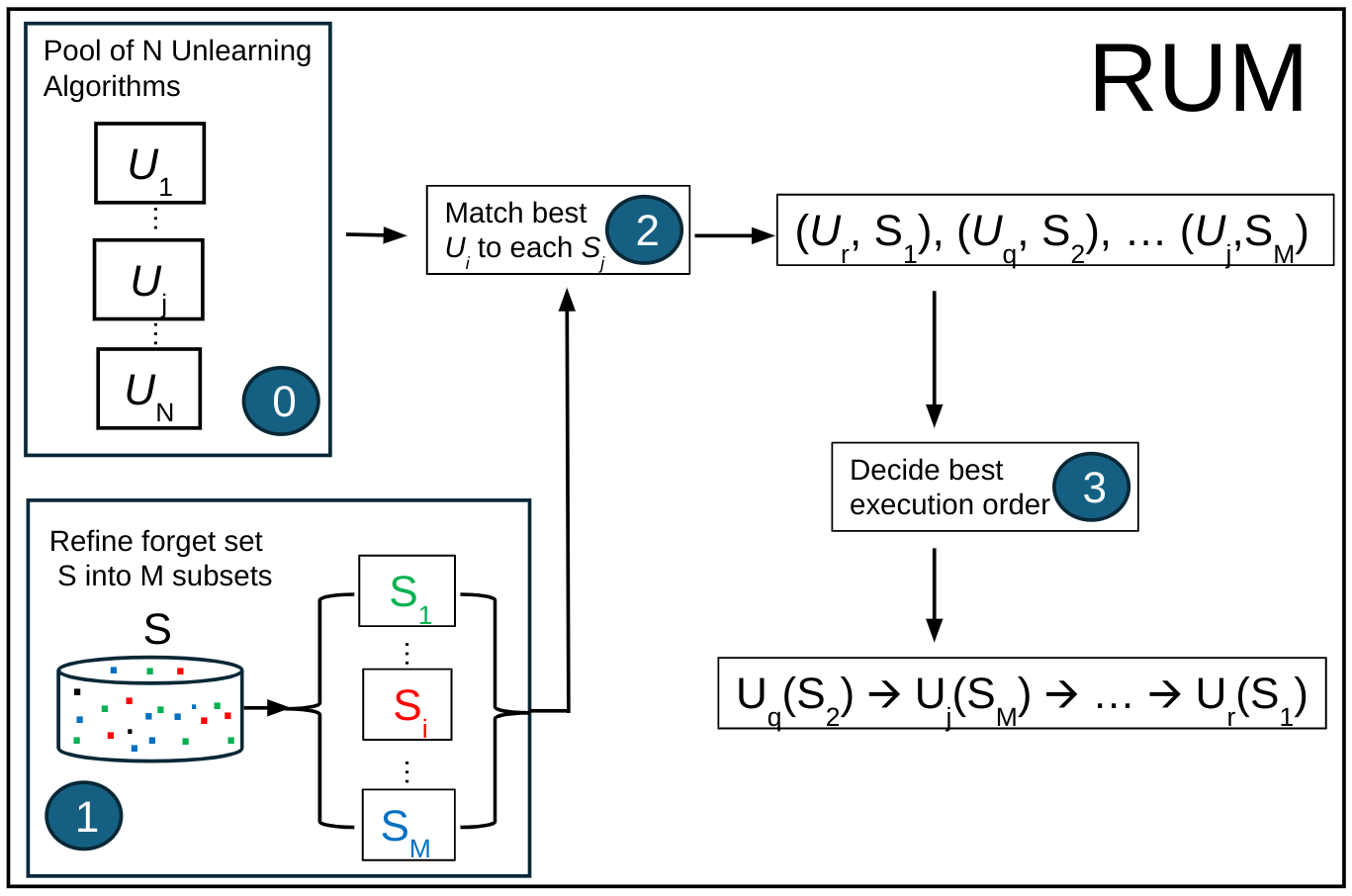

With this work, for the first time to our knowledge, we pose and answer the following key questions: What is the effect of unlearning algorithms on NN-based DB models?

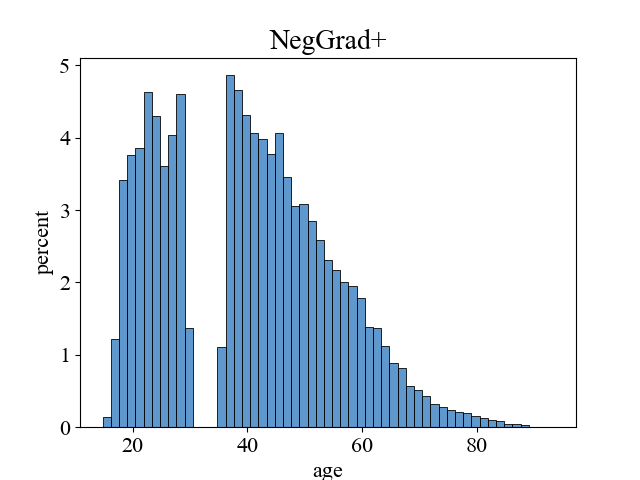

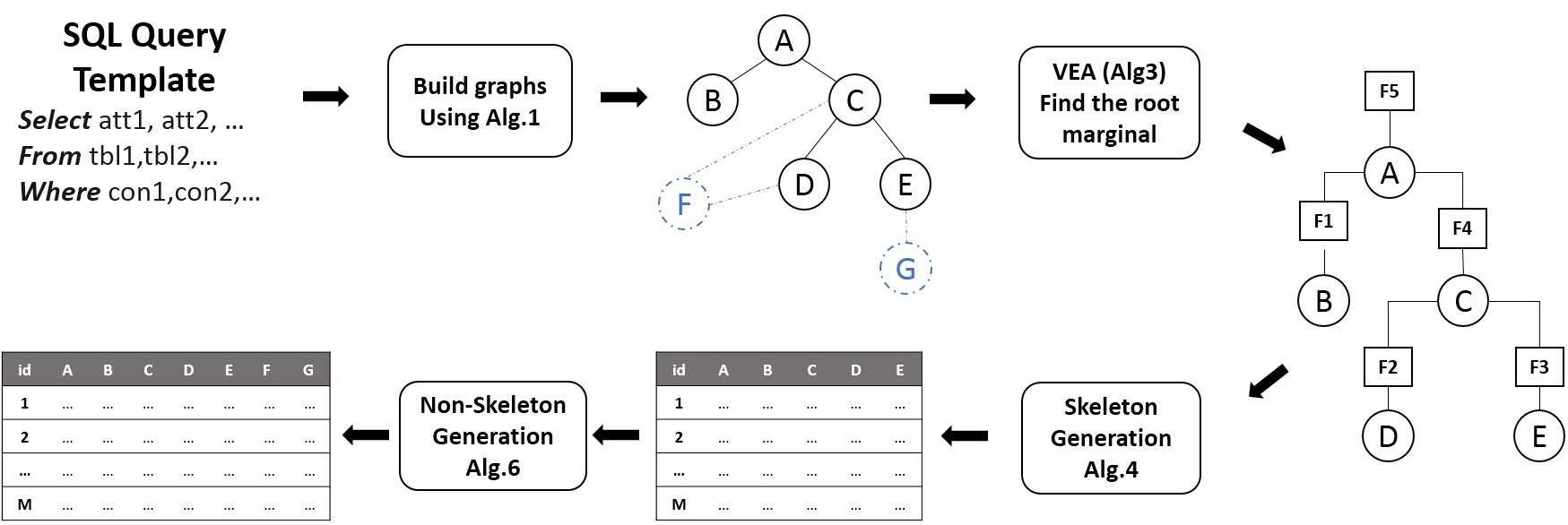

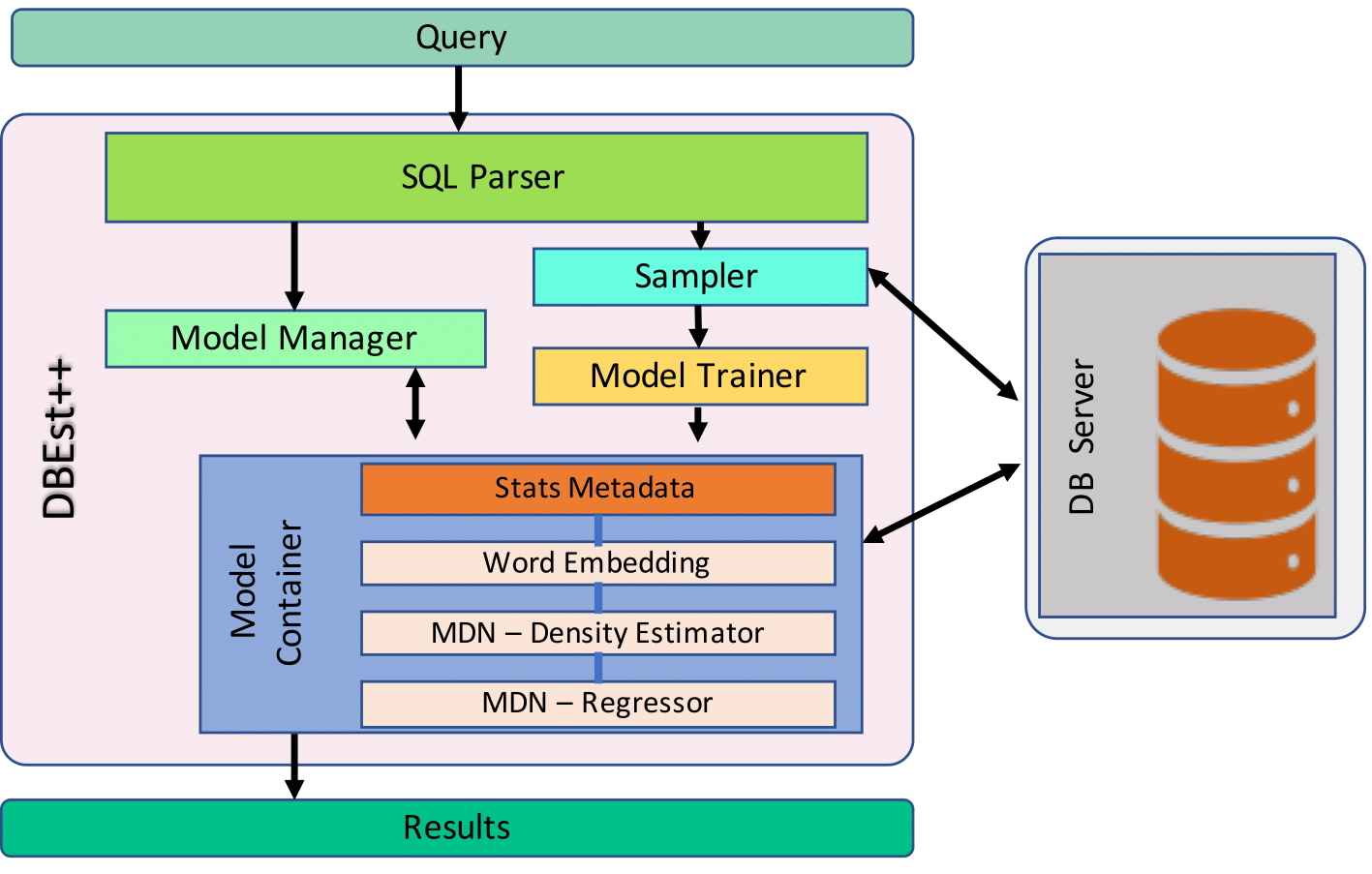

How do these effects translate to effects on key downstream DB tasks, such as cardinality/selectivity estimation (SE), approximate query processing (AQP), data generation (DG), and upstream tasks like data classification (DC)?

What metrics should we use to assess the impact and efficacy of unlearning algorithms in learned DBs?

Is the problem of (and solutions for) machine unlearning in DBs different from that of machine learning in DBs in the face of data insertions?

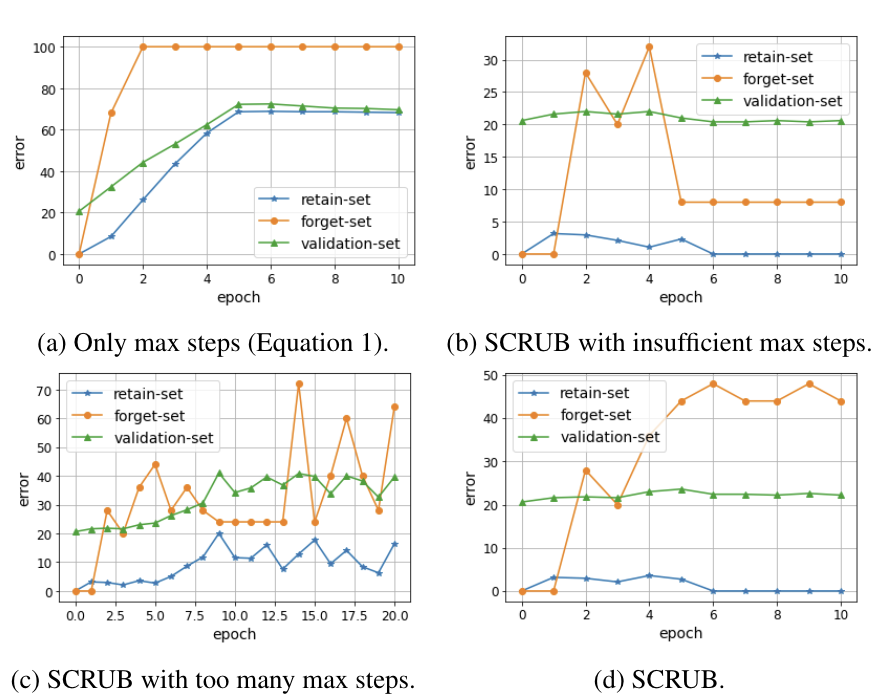

Is the problem of (and solutions for) machine unlearning for DBs different from unlearning in the ML literature?

What are the overhead and efficiency of unlearning algorithms (versus the naive solution of retraining from scratch)?

What is the sensitivity of unlearning to batching delete operations (in order to reduce model updating overheads)?

If we have a suitable unlearning algorithm (forgetting old knowledge), can we combine it with an algorithm handling data insertions (new knowledge) en route to solving the general adaptability/updatability requirement in learned DBs in the face of both data inserts and deletes?

We answer these questions using a comprehensive set of experiments, various unlearning algorithms, a variety of downstream DB tasks (such as SE, AQP, and DG), and an upstream task (DC), each with different NNs, and using a variety of metrics (model-internal and downstream-task-specific) on a variety of real datasets, making this also a first key step towards a benchmark for learned DB unlearning.